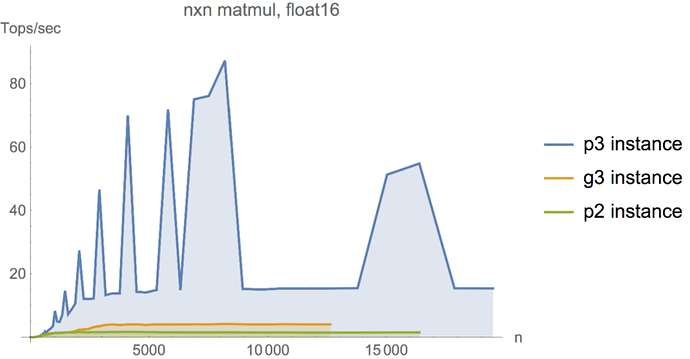

I’m getting highly non-uniform performance for float16 matmul on P3 using the recommended NVIDIA container. I was told by Tom Reed at GTC that this is not expected, so maybe someone could redirect this to the proper channel.

To reproduce, run the following on a Volta machine:

wget https://raw.githubusercontent.com/yaroslavvb/stuff/master/matmul_benchmark_seq.py

export TF_CPP_MIN_LOG_LEVEL=1

python matmul_benchmark_seq.py --dtype=float16

You’ll see something like this (matrix size, then T ops/second):

7512,76.0847702634

8192,87.2323633474

8933,15.2443599021

9741,15.0255254543

This means that it got 87 T ops/second for an 8192x8192 matmul, followed by 15 T ops/second for 8933x8933.
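To make the size of the drop concrete, here is a minimal sketch that parses the output above and converts each rate back into time per matmul. It assumes an n x n matmul is counted as 2*n^3 operations; the benchmark script may count slightly differently. The names `parse` and `seconds_per_matmul` are made up for this illustration.

```python
# Sample output copied from above: "n,T ops/second" on each line.
SAMPLE = """\
7512,76.0847702634
8192,87.2323633474
8933,15.2443599021
9741,15.0255254543
"""


def parse(text):
    """Return a list of (n, tops) tuples from 'n,rate' lines."""
    rows = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        n_str, rate_str = line.split(",")
        rows.append((int(n_str), float(rate_str)))
    return rows


def seconds_per_matmul(n, tops):
    # Assumption: an n x n matmul costs 2*n**3 operations
    # (one multiply and one add per inner-product term).
    return 2 * n**3 / (tops * 1e12)


rows = parse(SAMPLE)
best = max(rate for _, rate in rows)
for n, rate in rows:
    ms = seconds_per_matmul(n, rate) * 1e3
    print("{:6d}  {:6.1f} T ops/s  {:6.1f} ms  {:4.2f} of best".format(
        n, rate, ms, rate / best))
```

Under that assumption, the 8192 case takes roughly 12.6 ms per matmul and the 8933 case roughly 93.5 ms, so a matrix that needs about 30% more operations takes more than seven times as long.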

For more graphs, see "Peak performance of Amazon P3 instances" by Yaroslav Bulatov on Medium.

For more details: I used the Amazon Ubuntu CUDA 9 AMI from AWS Marketplace, "Deep Learning AMI with Source Code (CUDA 9, Ubuntu)".

Then I followed the AWS instructions to optimize for GPUs.

Then I used nvidia-docker with the official TensorFlow container:

sudo nvidia-persistenced

sudo nvidia-smi -ac 877,1530 # p3

sudo apt-get install apt-transport-https ca-certificates curl software-properties-common

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu xenial stable"

sudo apt-get update

apt-cache search docker-ce

sudo apt-get install -y docker-ce

wget https://github.com/NVIDIA/nvidia-docker/releases/download/v1.0.1/nvidia-docker_1.0.1-1_amd64.deb

sudo dpkg -i nvidia-docker_1.0.1-1_amd64.deb

sudo docker login nvcr.io

sudo docker pull nvcr.io/nvidia/tensorflow:17.10

sudo nvidia-docker run -it --shm-size=1g --ulimit memlock=-1 --ulimit stack=67108864 --rm -v /home/ubuntu/docker:/data/mnist nvcr.io/nvidia/tensorflow:17.10

wget https://raw.githubusercontent.com/yaroslavvb/stuff/master/matmul_benchmark_seq.py

export TF_CPP_MIN_LOG_LEVEL=1

export CUDA_VISIBLE_DEVICES=0

python matmul_benchmark_seq.py --dtype=float16
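For context on the measured numbers, the sketch below estimates the theoretical Tensor Core peak at the 1530 MHz graphics clock set with `nvidia-smi -ac 877,1530` above. It assumes the published V100 configuration of 640 Tensor Cores, each doing 64 fused multiply-adds per clock; treat it as a back-of-the-envelope figure, not a measured limit.

```python
# Assumed V100 hardware figures (from NVIDIA's published specs).
TENSOR_CORES = 640
FMA_PER_CORE_PER_CLOCK = 64
OPS_PER_FMA = 2              # one multiply plus one add
GRAPHICS_CLOCK_HZ = 1530e6   # second value passed to `nvidia-smi -ac`

peak_tops = (TENSOR_CORES * FMA_PER_CORE_PER_CLOCK * OPS_PER_FMA
             * GRAPHICS_CLOCK_HZ / 1e12)

# Measured rates copied from the sample output above.
measured = {8192: 87.2323633474, 8933: 15.2443599021}

print("theoretical peak: {:.1f} T ops/s".format(peak_tops))
for n, rate in sorted(measured.items()):
    print("n={}: {:.1f} T ops/s, {:.0%} of peak".format(
        n, rate, rate / peak_tops))
```

By this estimate the 8192 result is around 70% of the theoretical peak, while the 8933 result is around 12%.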